MNIST

In this demo, we show how to train a convolutional neural network to classify images of handwritten digits.

Training

The demo is entirely defined by the demos/mnist/mnist.conf configuration file, so no coding is required to create it. The network architecture is defined by the 'arch' variable.
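The actual 'arch' value is not reproduced on this page. As a rough illustration only, assuming a LeNet-style stack of unpadded 5×5 convolutions and 2×2 subsampling (these layer sizes are hypothetical, not read from mnist.conf), the spatial size of each layer's output can be traced like this:

```python
# Hypothetical LeNet-style layer stack; NOT the actual 'arch' from mnist.conf.
# Each entry is (layer name, kernel size, stride).
LAYERS = [
    ("conv1", 5, 1),
    ("subs1", 2, 2),
    ("conv2", 5, 1),
    ("subs2", 2, 2),
    ("conv3", 4, 1),
]


def output_size(in_size: int, kernel: int, stride: int) -> int:
    """Spatial size after a 'valid' (no padding) convolution or subsampling."""
    return (in_size - kernel) // stride + 1


def trace_sizes(in_size: int) -> list[tuple[str, int]]:
    """Return (layer name, output width) for each layer, for a square input."""
    sizes = []
    size = in_size
    for name, kernel, stride in LAYERS:
        size = output_size(size, kernel, stride)
        sizes.append((name, size))
    return sizes


for name, size in trace_sizes(28):
    print(f"{name}: {size}x{size}")
```

With these assumed sizes, a 28×28 input is reduced to a single 1×1 output (one answer per image), while a larger input such as 32×32 yields a 2×2 grid of outputs, one per location. This is the intuition behind detect being equivalent to plain classification on 28×28 images.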

To train the demo, follow these steps:

- Download and extract the MNIST dataset from the MNIST website.

- Modify the 'root' variable in your mnist.conf to point to your MNIST directory.

- Call:

train mnist.conf
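Before pointing 'root' at the dataset, it can help to check that the extracted files are intact. The sketch below is not part of the demo; it assumes the standard IDX files from the MNIST website (e.g. train-images-idx3-ubyte, after decompressing the .gz archives) and only inspects their big-endian headers:

```python
import struct

IMAGE_MAGIC = 2051  # 0x00000803: unsigned bytes, 3 dimensions (count, rows, cols)
LABEL_MAGIC = 2049  # 0x00000801: unsigned bytes, 1 dimension (count)


def read_idx_header(data: bytes) -> tuple[int, ...]:
    """Return the dimensions stored in an MNIST IDX header.

    The header is big-endian: a 4-byte magic number whose last byte is the
    number of dimensions, followed by one 4-byte size per dimension.
    """
    (magic,) = struct.unpack(">I", data[:4])
    if magic not in (IMAGE_MAGIC, LABEL_MAGIC):
        raise ValueError(f"not an MNIST IDX file (magic={magic})")
    ndim = magic & 0xFF
    return struct.unpack(f">{ndim}I", data[4 : 4 + 4 * ndim])


def check_file(path: str) -> tuple[int, ...]:
    """Read only the header (at most 4 + 4 * 3 = 16 bytes) of an IDX file."""
    with open(path, "rb") as f:
        return read_idx_header(f.read(16))
```

For example, check_file on the extracted train-images-idx3-ubyte should return (60000, 28, 28), and on t10k-labels-idx1-ubyte it should return (10000,); the directory is whatever you set 'root' to.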

After 5 iterations, 'test_correct' should converge to around 95%. You can tune the configuration, e.g. the 'eta' and 'reg' variables, to obtain a better score. In particular, for speed's sake this demo only uses 2000 samples for training and 1000 for testing; comment out the 'train_size' and 'val_size' variables to use the entire dataset.
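'test_correct' presumably reports the percentage of test samples classified correctly. The hypothetical helpers below (not part of EBLearn) compute that percentage and its binomial standard error, which gives a feel for how much a score measured on only 1000 test samples can fluctuate:

```python
import math


def percent_correct(predicted: list[int], expected: list[int]) -> float:
    """Percentage of samples whose predicted label equals the groundtruth label."""
    if len(predicted) != len(expected) or not expected:
        raise ValueError("need two non-empty label lists of equal length")
    hits = sum(p == e for p, e in zip(predicted, expected))
    return 100.0 * hits / len(expected)


def standard_error(percent: float, n_samples: int) -> float:
    """Binomial standard error, in percentage points, of an accuracy on n_samples."""
    p = percent / 100.0
    return 100.0 * math.sqrt(p * (1.0 - p) / n_samples)


# With 1000 test samples, each error costs 0.1 points, and a score near 95%
# carries roughly +/-0.7 points of sampling noise.
print(round(standard_error(95.0, 1000), 2))
```

Differences of a fraction of a point between two runs on the 1000-sample subset are therefore within noise; using the full test set narrows this considerably.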

Classification / Detection

To run your trained network on single 28×28 MNIST images, you just need to:

- Update the 'weights' variable in your mnist.conf with the weights file saved during training:

weights = mnist_net00005.mat

- Call:

detect mnist.conf

Note: when 'scaling_type' equals 4, detect uses the original input image at a single scale, which is equivalent to classification if the input is a 28×28 MNIST image. One can run in true detection mode by setting other scaling types (see detect for more information). However, since this network is not trained with an extra 'background' class, positive answers will be given at every location and scale, which is not desirable.
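The toy sketch below illustrates why the missing background class matters. It is not EBLearn code; the per-location score lists and the background class index are hypothetical. Without a background class, picking the best-scoring class at each location always returns some digit, so every location is reported; with an extra background class, locations where that class wins can be dropped.

```python
def argmax(scores: list[float]) -> int:
    """Index of the highest score."""
    return max(range(len(scores)), key=scores.__getitem__)


def detect_without_background(score_map: list[list[float]]) -> list[tuple[int, int]]:
    """Return (location, digit) for every location: there is no 'nothing here' answer."""
    return [(loc, argmax(scores)) for loc, scores in enumerate(score_map)]


def detect_with_background(score_map: list[list[float]], background: int) -> list[tuple[int, int]]:
    """Return (location, class) only where the winning class is not the background."""
    detections = []
    for loc, scores in enumerate(score_map):
        best = argmax(scores)
        if best != background:
            detections.append((loc, best))
    return detections


# Toy scores for 2 locations with digits 0-9 only: both locations report a digit.
digits_only = [[0.1] * 9 + [0.2], [0.3] + [0.0] * 9]
print(detect_without_background(digits_only))

# Same idea with an 11th (background) class at index 10: location 0 is dropped.
with_bg = [[0.0] * 10 + [0.9], [0.0, 0.0, 0.0, 0.8] + [0.0] * 7]
print(detect_with_background(with_bg, background=10))
```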

Note: when using detect, one can set the 'next_on_key' variable, e.g. to 'n'. The display will then pause after processing each image until the user presses that key (while the main window has focus).

Visualization

When visualization is enabled (see the 'show_*' variables in mnist.conf), the following windows appear during training.

Below are the first 100 samples of the MNIST test set: groundtruth on the left, correct and incorrect answers in the middle (incorrect answers are boxed, though none occur in these first 100 samples), and incorrect samples only on the right.

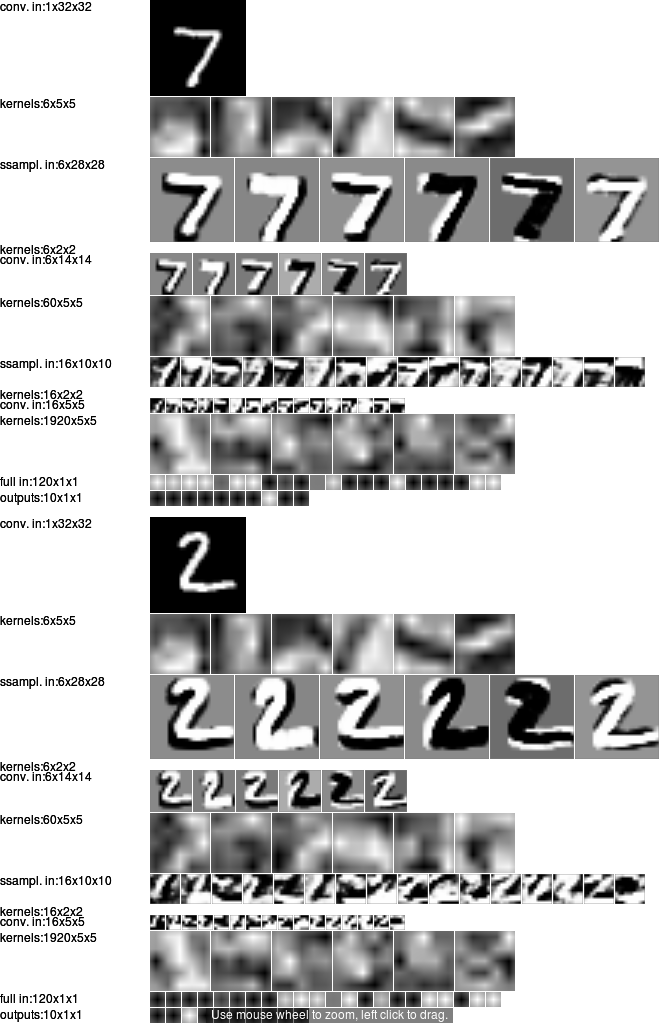

Below are the network's internal states while forward-processing two samples, before training.

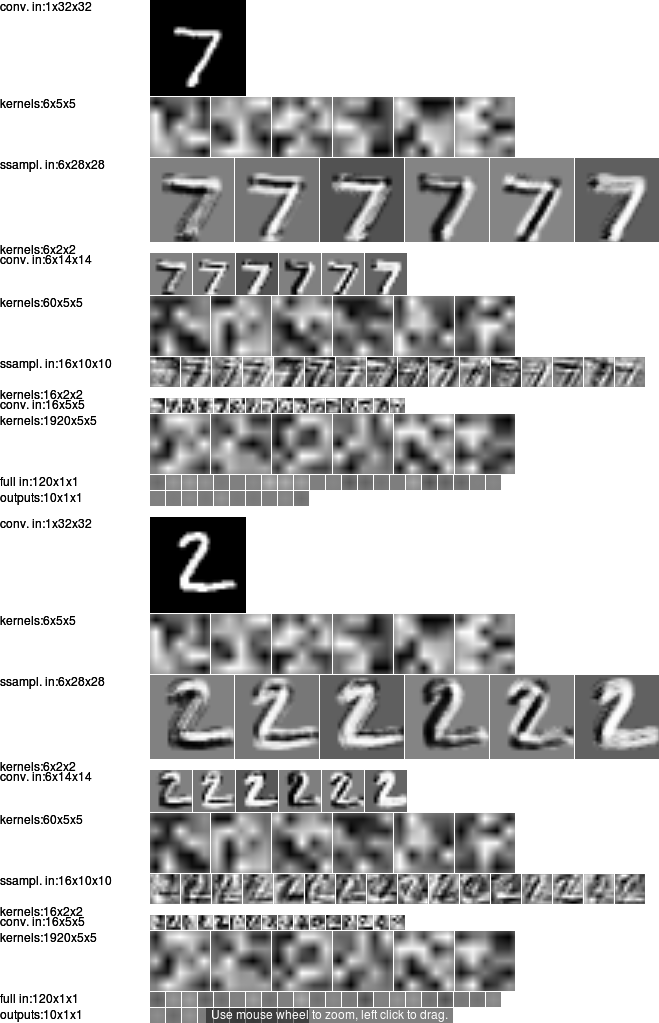

Below are the network's internal states while forward-processing two samples, after training.