Face detection

This face detection demo was trained using a cropped version of the Labeled Faces in the Wild face dataset.

Compiling

This demo uses the generic detection tool (see eblearn/tools/tools/src/detect.cpp).

See instructions to compile the 'detect' (multi-threaded) or 'stdetect' (single-threaded) project.

Under Linux or Mac, simply call:

make detect

To print more details about the internal operations of detect, use detect_debug (make detect_debug).

Detecting

To run the demo on a webcam, call from the eblearn directory: bin/detect demos/face/best_cam.conf

Note that processing time can be reduced on Intel processors by installing the Intel IPP libraries (see installation instructions).

To run the demo on a folder of images, run from the eblearn directory: bin/detect demos/face/best_dir.conf your-image-folder

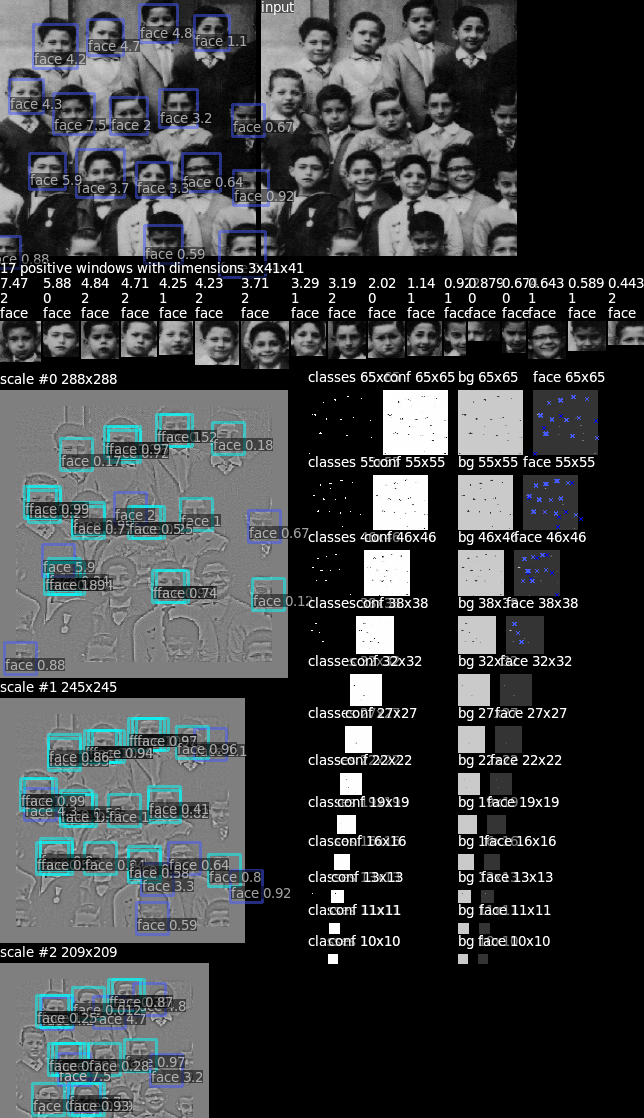

Below are the details of multi-scale detection as displayed when using “display_threads = 1”. From top to bottom, we display the following:

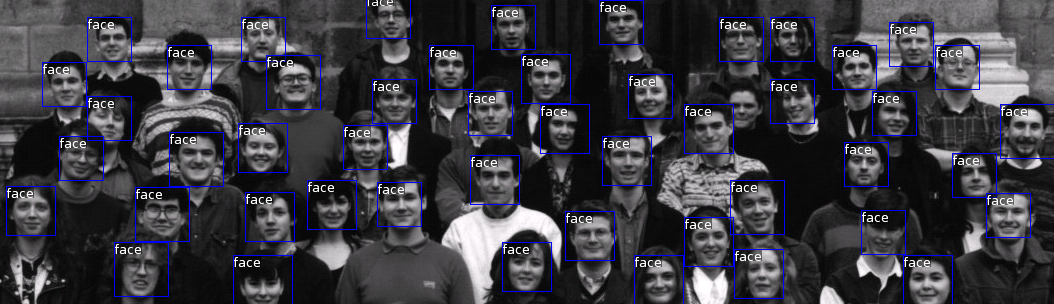

- Image with final bounding box answers and their confidence (higher is more confident).

- Each positive input is listed with some details from top to bottom: confidence, scale at which it was detected and class name.

- Inputs and outputs of each scale. From left to right:

  - the preprocessed scale input and each detection (light blue) contributing to a final voted detection (navy blue)

  - the discrete class map (here negative is 0, i.e. black, and positive is 1, i.e. white)

  - the confidence map (white is very confident, black is not confident)

  - the raw output map of each class, the first one being the background class “bg” and the second one the face class. The output pixels corresponding to positive answers are marked with blue crosses in the output maps.

Tweaking parameters

The parameters of the demo can be tweaked by changing variables in the .conf file. Adding variables at the end of the file will override any variable defined earlier.
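The override rule above can be illustrated with a minimal Python sketch. It is not eblearn's configuration parser: it only assumes that a .conf file consists of `name = value` lines and that `#` starts a comment (both are assumptions made for this illustration), and the values shown are arbitrary.

```python
def parse_conf(text):
    """Parse 'name = value' lines; a later assignment replaces an earlier one."""
    variables = {}
    for line in text.splitlines():
        # Assumption for this sketch: '#' starts a comment.
        line = line.split("#", 1)[0].strip()
        if "=" not in line:
            continue
        name, value = line.split("=", 1)
        variables[name.strip()] = value.strip()
    return variables


# Illustrative file content: defaults first, overrides appended at the end.
conf_text = """
camera = opencv
threshold = 0.5
nthreads = 2
# overrides appended at the end of the file
threshold = 0.9
nthreads = 4
"""

settings = parse_conf(conf_text)
print(settings["threshold"], settings["nthreads"])  # prints: 0.9 4
```

Appending overrides rather than editing the earlier lines keeps the original values visible in the file, which makes it easy to revert an experiment.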

Main tweakable parameters

- camera = {directory,video,opencv,shmem}, defines the type of input ('your-data-input'). Possible values are:

  - directory: Processes all images found recursively in the directory given as second argument to detect.

  - video: Processes all frames found in the video file given as second argument to detect, and encodes the output frames as a new video if 'save_video' is set.

  - opencv: Takes input frames from your webcam, using OpenCV to connect to it (requires OpenCV).

  - shmem: Grabs video frames from shared memory.

  - windows: webcam under Windows

  - v4l2: v4l2 Linux camera

  - Kinect: Kinect camera

- threshold = [double, from -1.0 to 1.0], outputs lower than this threshold are ignored.

- scaling = [double, > 1.0], sets the scaling ratio between consecutive scales of the multi-resolution detector. A lower scaling ratio produces more scales, which reduces false negatives but is more computationally expensive.

- nthreads = [integer], sets the number of detection threads. Increasing the number of threads does not change the latency of each detection, but increases the detection frequency, yielding smoother video with a webcam input or faster processing of images with the 'directory' input. Set 'nthreads' to the number of free CPU cores on your machine.
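To see why a lower 'scaling' value costs more computation, the following Python sketch counts the scales of a generic image pyramid. It is an illustration under assumptions, not the scale selection used by detect: the function `pyramid_sizes`, the 480x640 input, and the minimum size of 32 are all hypothetical.

```python
def pyramid_sizes(height, width, scaling, min_size):
    """Return the (height, width) of each scale, largest first.

    Each scale is the previous one divided by `scaling`; the pyramid stops
    when the smaller side drops below `min_size` (a hypothetical minimum
    input size for the detector).
    """
    if scaling <= 1.0:
        raise ValueError("scaling must be > 1.0")
    sizes = []
    h, w = float(height), float(width)
    while min(h, w) >= min_size:
        sizes.append((round(h), round(w)))
        h /= scaling
        w /= scaling
    return sizes


# A smaller ratio yields more scales to process for the same input.
coarse = pyramid_sizes(480, 640, scaling=2.0, min_size=32)
fine = pyramid_sizes(480, 640, scaling=1.5, min_size=32)
print(len(coarse), len(fine))  # prints: 4 7
```

Each additional scale is another full pass of the detector over a resized input, so the run time grows with the number of scales.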

Other tweakable parameters

- input_max = [integer], limits the input size to n x n, where n is the given value.

- save_detections = {0,1}, saves detected regions (both original and preprocessed versions) into directory 'detections_[date]' if equal to 1.

- save_video = {0,1}, saves processed frames and encodes them into a video in directory 'video_[date]' if equal to 1.

- save_video_fps = [integer], sets the number of frames per second in the output video.

- input_video_max_duration = [integer], when camera=video, process only the first n seconds of the video.

- input_video_sstep = [integer], when camera=video, process only one frame every n seconds.
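The combined effect of 'input_video_max_duration' and 'input_video_sstep' can be pictured with the Python sketch below. How detect actually maps seconds to frames is not described here, so this assumes a constant frame rate and sampling that starts at the first frame; `frames_to_process` is a hypothetical helper, not part of eblearn.

```python
def frames_to_process(fps, max_duration, sstep):
    """Indices of the frames sampled every `sstep` seconds within the
    first `max_duration` seconds, assuming a constant frame rate."""
    if fps <= 0 or sstep <= 0:
        raise ValueError("fps and sstep must be positive")
    last = int(max_duration * fps)   # frames from this index on are past the limit
    step = max(1, int(sstep * fps))  # number of frames between two samples
    return list(range(0, last, step))


# 25 fps video, first 10 seconds only, one frame every 2 seconds.
print(frames_to_process(fps=25, max_duration=10, sstep=2))
# prints: [0, 50, 100, 150, 200]
```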

Training

Prepare the dataset

Call the dataset preparation script (uncomment regions as needed; fetching data and compiling non-face samples are currently commented out): ./face_dsprepare.sh

Compile trainer

See instructions to compile the 'train' project (see eblearn/tools/tools/src/train.cpp).

Under Linux, to compile this project only, simply call from the tools directory: make release prj=train

You can also compile the meta trainer which generates multiple jobs based on the multiple-value variables in face.conf and reports results by email. Under Linux, call: make release prj=metarun

Train

Modify training parameters in eblearn/tools/demos/face/face.conf as necessary. Variables can accept multiple values, in which case the meta trainer produces multiple configurations and emails you the most successful combination.

Then start training with: ./bin/train tools/demos/face/face.conf

Or, if the configuration file defines multiple configurations, use: ./bin/metarun tools/demos/face/face.conf
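The way multi-value variables expand into several configurations can be sketched as a Cartesian product. The Python below is only an illustration of that idea, not metarun's implementation; the variable names `eta` and `reg` and their values are hypothetical and do not come from face.conf.

```python
from itertools import product


def expand_configs(variables):
    """Return one configuration (dict) per combination of variable values."""
    names = list(variables)
    combos = product(*(variables[name] for name in names))
    return [dict(zip(names, combo)) for combo in combos]


# Hypothetical multi-value variables: 2 values x 3 values = 6 configurations.
configs = expand_configs({"eta": [0.1, 0.01], "reg": [0, 1, 2]})
print(len(configs))  # prints: 6
print(configs[0])    # prints: {'eta': 0.1, 'reg': 0}
```

The number of jobs is the product of the number of values of each variable, so it grows quickly as more variables are given multiple values.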

Train on false positives

It is important to train on false positives to reduce false detections. Call the 'face_train.sh' script to automatically run multiple passes of false-positive training.