ebl::full_layer< T, Tstate > Class Template Reference

#include <ebl_layers.h>

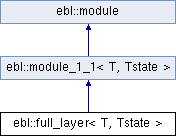

Inheritance diagram for ebl::full_layer< T, Tstate >:

Public Member Functions

| full_layer (parameter< T, Tstate > *p, intg indim0, intg noutputs, bool tanh=true, const char *name="full_layer") | |

| virtual | ~full_layer () |

| Destructor. | |

| void | fprop (Tstate &in, Tstate &out) |

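| Forward propagation from in to out. | |

| void | bprop (Tstate &in, Tstate &out) |

| Backward propagation of gradients from out to in. | |

| void | bbprop (Tstate &in, Tstate &out) |

| Backward propagation of second derivatives from out to in. | |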

| void | forget (forget_param_linear &fp) |

| Initialize the weights to random values. | |

| virtual fidxdim | fprop_size (fidxdim &i_size) |

| virtual idxdim | bprop_size (const idxdim &o_size) |

| virtual full_layer< T, Tstate > * | copy () |

| Returns a deep copy of this module. | |

| virtual std::string | describe () |

| Returns a string describing this module and its parameters. | |

Public Attributes

| linear_module< T, Tstate > | linear |

| Linear module holding the weights. | |

| addc_module< T, Tstate > | adder |

| Bias vector. | |

| module_1_1< T, Tstate > * | sigmoid |

| The non-linear function. | |

| Tstate * | sum |

| Weighted sum. | |

Detailed Description

template<typename T, class Tstate = bbstate_idx<T>>

class ebl::full_layer< T, Tstate >

A simple fully connected neural-network layer: a linear module (the weights), followed by a bias addition and a non-linearity (tanh by default).
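To make the description concrete, the following is a minimal standalone sketch of the forward computation such a layer performs. It does not use eblearn types; the function name `full_layer_fprop` and the row-major weight layout are assumptions made for illustration, not the library's implementation.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Hypothetical standalone sketch; not the eblearn implementation.
// Computes out[j] = tanh(bias[j] + sum_i weights[j * nin + i] * in[i]).
std::vector<double> full_layer_fprop(
    const std::vector<double>& weights,  // assumed row-major, nout x nin
    const std::vector<double>& bias,     // nout entries
    const std::vector<double>& in)       // nin entries
{
    const std::size_t nin = in.size();
    const std::size_t nout = bias.size();
    assert(weights.size() == nin * nout);  // sizes must be consistent

    std::vector<double> out(nout);
    for (std::size_t j = 0; j < nout; ++j) {
        double sum = bias[j];                     // role of `adder`: bias term
        for (std::size_t i = 0; i < nin; ++i)
            sum += weights[j * nin + i] * in[i];  // role of `linear`: weighted sum
        out[j] = std::tanh(sum);                  // role of `sigmoid`: squashing
    }
    return out;
}
```

The three steps in the loop correspond loosely to the `linear`, `adder`, and `sigmoid` members listed above, with the intermediate value playing the role of `sum`.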

Constructor & Destructor Documentation

template<typename T , class Tstate >

| ebl::full_layer< T, Tstate >::full_layer | ( | parameter< T, Tstate > * | p, |
| | | intg | indim0, |
| | | intg | noutputs, |
| | | bool | tanh = true, |
| | | const char * | name = "full_layer" |
| | ) | | |

Constructor. Arguments are a pointer to a parameter to which the trainable weights will be appended, the number of inputs, and the number of outputs.

- Parameters:

  - indim0: The number of inputs.

  - noutputs: The number of outputs.

  - tanh: If true, use the tanh squasher; otherwise use stdsigmoid.
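The effect of the `tanh` flag can be sketched as a choice between two squashing functions. The snippet below is a standalone illustration, not library code: the helper `squash` is hypothetical, and the definition of `stdsigmoid` as the scaled tanh 1.7159 tanh(2x/3) is an assumption that this page does not confirm.

```cpp
#include <cmath>

// Assumed definition of the "standard sigmoid": 1.7159 * tanh(2x/3).
// The constants are an assumption, not taken from this page.
inline double stdsigmoid(double x) {
    return 1.7159 * std::tanh(2.0 * x / 3.0);
}

// Hypothetical helper mirroring the constructor's `tanh` flag:
// true selects plain tanh, false selects stdsigmoid.
inline double squash(double x, bool use_tanh = true) {
    return use_tanh ? std::tanh(x) : stdsigmoid(x);
}
```

Under this assumption both squashers are odd functions that pass through zero; they differ in output range, roughly (-1, 1) for tanh and (-1.7159, 1.7159) for the scaled variant.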

Member Function Documentation

template<typename T , class Tstate >

| idxdim ebl::full_layer< T, Tstate >::bprop_size | ( | const idxdim & | o_size | ) | [virtual] |

Return dimensions compatible with this module given output dimensions. See module_1_1_gen's documentation for more details.

template<typename T , class Tstate >

| fidxdim ebl::full_layer< T, Tstate >::fprop_size | ( | fidxdim & | i_size | ) | [virtual] |

Return dimensions that are compatible with this module. See module_1_1_gen's documentation for more details.

Reimplemented from ebl::module_1_1< T, Tstate >.

The documentation for this class was generated from the following files:

- /home/rex/ebltrunk/core/libeblearn/include/ebl_layers.h

- /home/rex/ebltrunk/core/libeblearn/include/ebl_layers.hpp