#include <ebl_normalization.h>

Public Member Functions | |

| subtractive_norm_module (idxdim &kerdim, int nf, bool mirror=false, bool global_norm=false, parameter< T, Tstate > *p=NULL, const char *name="subtractive_norm", bool across_features=true, double cgauss=2.0, bool fsum_div=false, float fsum_split=1.0) | |

| virtual | ~subtractive_norm_module () |

| destructor | |

| virtual void | fprop (Tstate &in, Tstate &out) |

| forward propagation from in to out | |

| virtual void | bprop (Tstate &in, Tstate &out) |

| backward propagation from out to in | |

| virtual void | bbprop (Tstate &in, Tstate &out) |

| second-derivative backward propagation from out to in | |

| virtual void | dump_fprop (Tstate &in, Tstate &out) |

| virtual subtractive_norm_module< T, Tstate > * | copy (parameter< T, Tstate > *p=NULL) |

| virtual bool | optimize_fprop (Tstate &in, Tstate &out) |

| virtual std::string | describe () |

| Returns a string describing this module and its parameters. | |

Protected Attributes | |

| parameter< T, Tstate > * | param |

| layers< T, Tstate > | convmean |

| convolution_module< T, Tstate > * | meanconv |

| module_1_1< T, Tstate > * | padding |

| idx< T > | w |

| weights | |

| diff_module< T, Tstate > | difmod |

| difference module | |

| Tstate | inmean |

| bool | global_norm |

| If true, apply global normalization first. | |

| int | nfeatures |

| idxdim | kerdim |

| bool | across_features |

| If true, normalize across features. | |

| bool | mirror |

| If true, pad borders by mirroring the input; otherwise zero-pad. | |

Friends | |

| class | contrast_norm_module_gui |

| class | contrast_norm_module |

Detailed Description

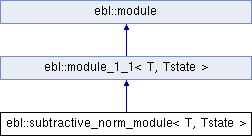

template<typename T, class Tstate = bbstate_idx<T>>

class ebl::subtractive_norm_module< T, Tstate >

Subtractive normalization operation using a weighted expectation over a local neighborhood. An input set of feature maps is locally normalized to be zero mean.
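As a rough illustration of the operation described above, the following standalone sketch subtracts a Gaussian-weighted local mean from a single 2D feature map. It does not use the eblearn API: `Map2D`, `gaussian_kernel`, and `subtractive_norm` are hypothetical names, the `sigma` parameter is an assumption (it is not claimed to match `cgauss`), and borders are handled with zero-padding only.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Hypothetical helper (not an eblearn type): a row-major 2D feature map.
struct Map2D {
  std::size_t h, w;
  std::vector<double> v;
  Map2D(std::size_t h_, std::size_t w_, double fill = 0.0)
      : h(h_), w(w_), v(h_ * w_, fill) {}
  double &at(std::size_t y, std::size_t x) { return v[y * w + x]; }
  double at(std::size_t y, std::size_t x) const { return v[y * w + x]; }
};

// Build a k x k Gaussian kernel and normalize it so the weights sum to 1.
Map2D gaussian_kernel(std::size_t k, double sigma) {
  assert(k % 2 == 1 && sigma > 0.0);
  Map2D ker(k, k);
  const double c = (k - 1) / 2.0;  // kernel center
  double sum = 0.0;
  for (std::size_t y = 0; y < k; ++y)
    for (std::size_t x = 0; x < k; ++x) {
      const double dy = y - c, dx = x - c;
      ker.at(y, x) = std::exp(-(dx * dx + dy * dy) / (2.0 * sigma * sigma));
      sum += ker.at(y, x);
    }
  for (double &e : ker.v) e /= sum;
  return ker;
}

// out(y, x) = in(y, x) - sum_j w_j * in_j, with j ranging over the
// kernel-sized neighborhood of (y, x). Out-of-range samples count as 0.
Map2D subtractive_norm(const Map2D &in, const Map2D &ker) {
  Map2D out(in.h, in.w);
  const long ry = static_cast<long>(ker.h / 2);
  const long rx = static_cast<long>(ker.w / 2);
  const long H = static_cast<long>(in.h), W = static_cast<long>(in.w);
  for (long y = 0; y < H; ++y)
    for (long x = 0; x < W; ++x) {
      double mean = 0.0;
      for (long ky = -ry; ky <= ry; ++ky)
        for (long kx = -rx; kx <= rx; ++kx) {
          const long yy = y + ky, xx = x + kx;
          if (yy < 0 || yy >= H || xx < 0 || xx >= W) continue;  // zero pad
          mean += ker.at(ky + ry, kx + rx) * in.at(yy, xx);
        }
      out.at(y, x) = in.at(y, x) - mean;
    }
  return out;
}
```

Because the kernel weights sum to 1, a constant input maps to zero wherever the kernel lies fully inside the map. With zero-padding the border outputs stay nonzero, which is one reason a mirroring option can be useful.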

Constructor & Destructor Documentation

| ebl::subtractive_norm_module< T, Tstate >::subtractive_norm_module | (idxdim &kerdim, int nf, bool mirror=false, bool global_norm=false, parameter< T, Tstate > *p=NULL, const char *name="subtractive_norm", bool across_features=true, double cgauss=2.0, bool fsum_div=false, float fsum_split=1.0) |

- Parameters:

  - kerdim: The kernel dimensions.
  - nf: The number of feature maps input to this module.
  - mirror: If true, pad the border by mirroring the input; otherwise use zero-padding (default).
  - global_norm: If true, apply global normalization first.
  - p: If specified, parameter p holds learned weights.
  - across_features: If true, normalize across feature dimensions in addition to spatial dimensions.
  - learn_mean: If true, learn mean weighting. (This parameter is documented here but does not appear in the constructor signature above.)
  - cgauss: Gaussian kernel coefficient.

The constructor normalizes the kernel.

A feature-sum module sums along features. The same effect could be obtained by making the connection table of the convolution module all-to-all, but that would be very inefficient.
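The border handling and kernel normalization mentioned in the constructor documentation can be sketched in isolation. The code below is an assumption-laden illustration, not eblearn code: `mirror_index`, `padded_at`, and `across_feature_kernel` are hypothetical helpers, the reflection convention (edge sample repeated) is one of several possible choices, and scaling the replicated kernel so that all weights over all `nf` features sum to 1 is only a guess at what normalizing across features means here.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Reflect an out-of-range index back into [0, n).
// Convention assumed here: the edge sample is repeated (-1 -> 0, n -> n-1).
long mirror_index(long i, long n) {
  assert(n > 0);
  while (i < 0 || i >= n) {
    if (i < 0) i = -i - 1;
    else i = 2 * n - i - 1;
  }
  return i;
}

// Read a 1D signal with either zero-padding or mirror padding.
double padded_at(const std::vector<double> &s, long i, bool mirror) {
  const long n = static_cast<long>(s.size());
  if (i >= 0 && i < n) return s[static_cast<std::size_t>(i)];
  if (!mirror) return 0.0;
  return s[static_cast<std::size_t>(mirror_index(i, n))];
}

// Replicate a spatial kernel over nf features and rescale it so that the
// weights of all features together sum to 1 (assumed across-features case).
std::vector<std::vector<double>> across_feature_kernel(
    const std::vector<double> &ker, int nf) {
  assert(nf > 0);
  double sum = 0.0;
  for (double e : ker) sum += e;
  assert(sum != 0.0);
  std::vector<std::vector<double>> out(static_cast<std::size_t>(nf), ker);
  for (auto &k : out)
    for (double &e : k) e /= sum * nf;
  return out;
}
```

For the signal `{1, 2, 3}`, `padded_at(s, -1, false)` yields 0 while `padded_at(s, -1, true)` yields the first sample, which shows how the two padding modes differ at the border.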

Member Function Documentation

| void ebl::subtractive_norm_module< T, Tstate >::bbprop | (Tstate &in, Tstate &out) | [virtual] |

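Second-derivative backward propagation from out to in.

The source comments for this function name two quantities: the difference `in - mean`, and the weighted mean `sum_j (w_j * in_j)`.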

Reimplemented from ebl::module_1_1< T, Tstate >.

| subtractive_norm_module< T, Tstate > * ebl::subtractive_norm_module< T, Tstate >::copy | ( | parameter< T, Tstate > * | p = NULL | ) | [virtual] |

Returns a deep copy of this module.

- Parameters:

  - p: If NULL, reuse current parameter space, otherwise allocate new weights on parameter 'p'.

Reimplemented from ebl::module_1_1< T, Tstate >.

| void ebl::subtractive_norm_module< T, Tstate >::dump_fprop | (Tstate &in, Tstate &out) | [virtual] |

Calls fprop and then dumps internal buffers, inputs and outputs into files. This can be useful for debugging.

Reimplemented from ebl::module_1_1< T, Tstate >.

| bool ebl::subtractive_norm_module< T, Tstate >::optimize_fprop | (Tstate &in, Tstate &out) | [virtual] |

Pre-determines the order of hidden buffers so that only 2 buffers are used, which reduces the memory footprint. Returns true if the output is actually written to out, and false if it ends up in in.
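The two-buffer idea can be pictured as a ping-pong scheme in which a chain of stages alternates between the two buffers. The sketch below is only an illustration of that idea under stated assumptions, not the eblearn implementation: `Buffer`, `Stage`, and `run_ping_pong` are hypothetical names, and each stage is assumed to fully overwrite its destination.

```cpp
#include <cassert>
#include <cstddef>
#include <functional>
#include <utility>
#include <vector>

using Buffer = std::vector<double>;
// A stage reads from one buffer and fully overwrites the other.
using Stage = std::function<void(const Buffer &, Buffer &)>;

// Run the stages alternately between the two buffers.
// Returns true if the final result is in `out`, false if it is in `in`.
bool run_ping_pong(const std::vector<Stage> &stages, Buffer &in, Buffer &out) {
  Buffer *src = &in;
  Buffer *dst = &out;
  for (const Stage &stage : stages) {
    stage(*src, *dst);
    std::swap(src, dst);  // the buffer just written becomes the next source
  }
  // After the last swap, src points at the buffer holding the result.
  return src == &out;
}

// Example stage: add 1 to every element.
void add_one(const Buffer &a, Buffer &b) {
  b.resize(a.size());
  for (std::size_t i = 0; i < a.size(); ++i) b[i] = a[i] + 1.0;
}
```

With an odd number of stages the result lands in `out`; with an even number it lands back in `in`, which is why a caller needs the boolean return value to know where to read the output.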

Reimplemented from ebl::module_1_1< T, Tstate >.

The documentation for this class was generated from the following files:

- /home/rex/ebltrunk/core/libeblearn/include/ebl_normalization.h

- /home/rex/ebltrunk/core/libeblearn/include/ebl_normalization.hpp